Download Confluent Certified Developer for Apache Kafka Certification Examination.CCDAK.PracticeTest.2025-05-12.79q.vcex

| Field | Value |
|---|---|
| Vendor | Confluent |
| Exam Code | CCDAK |
| Exam Name | Confluent Certified Developer for Apache Kafka Certification Examination |
| Date | May 12, 2025 |
| File Size | 46 KB |

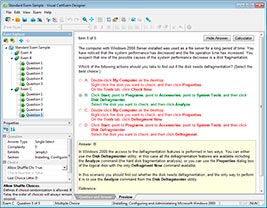

How to open VCEX files?

Files with VCEX extension can be opened by ProfExam Simulator.

Discount: 20%

Demo Questions

Question 1

A client connects to a broker in the cluster and sends a fetch request for a partition in a topic. It gets a NotLeaderForPartitionException in the response. How does the client handle this situation?

- Get the ID of the broker hosting the leader replica from ZooKeeper and send the request to it

- Send a metadata request to the same broker for the topic and select the broker hosting the leader replica

- Send a metadata request to ZooKeeper for the topic and select the broker hosting the leader replica

- Send a fetch request to each broker in the cluster

Correct answer: B

Explanation:

If a client has stale information about which broker leads a partition, it issues a metadata request. Any broker can answer a metadata request, so afterwards the client knows which broker is the designated leader for each topic partition. Produce requests, and by default fetch requests, can only be sent to the broker hosting the partition leader.
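The refresh-and-retry behavior described above can be sketched as a toy model in plain Java. It has no Kafka dependency. `ToyCluster`, `ToyClient` and `NotLeaderException` are hypothetical stand-ins and are not the Kafka client API, which does this internally. As in answer B, the metadata request goes to the same broker that returned the error.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for the "not leader" error a broker returns.
class NotLeaderException extends RuntimeException {
    NotLeaderException(String message) {
        super(message);
    }
}

// Toy cluster: holds the authoritative partition -> leader broker mapping.
final class ToyCluster {
    final Map<String, Integer> leaders = new HashMap<>();

    // Any broker can answer a metadata request, so the answering broker id is not used.
    int metadata(int answeringBroker, String partition) {
        return leaders.get(partition);
    }

    // Only the leader serves a fetch; any other broker rejects it.
    String fetch(int brokerId, String partition) {
        Integer leader = leaders.get(partition);
        if (leader == null || leader.intValue() != brokerId) {
            throw new NotLeaderException("broker " + brokerId + " does not lead " + partition);
        }
        return "records of " + partition + " from broker " + brokerId;
    }
}

// Toy client: caches leaders and refreshes the cache when a broker says it is not the leader.
final class ToyClient {
    private final ToyCluster cluster;
    private final Map<String, Integer> leaderCache = new HashMap<>();
    int metadataRequests = 0;

    ToyClient(ToyCluster cluster) {
        this.cluster = cluster;
    }

    String fetch(String partition, int guessedLeader) {
        int target = leaderCache.getOrDefault(partition, guessedLeader);
        try {
            return cluster.fetch(target, partition);
        } catch (NotLeaderException e) {
            // Ask the same broker for fresh metadata, cache the real leader, then retry once.
            metadataRequests++;
            int leader = cluster.metadata(target, partition);
            leaderCache.put(partition, leader);
            return cluster.fetch(leader, partition);
        }
    }
}
```

After the first refresh, the cached leader is used directly, so later fetches need no extra metadata request.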

Question 2

To continuously export data from Kafka into a target database, I should use

- Kafka Producer

- Kafka Streams

- Kafka Connect Sink

- Kafka Connect Source

Correct answer: C

Explanation:

Kafka Connect Sink connectors export data from Kafka to external systems such as databases. Kafka Connect Source connectors import data from external systems into Kafka.

Question 3

A consumer starts with auto.offset.reset=none, and the topic partition currently holds data for offsets 45 to 2311. The consumer group previously committed offset 10 for this partition. Where will the consumer read from?

- offset 45

- offset 10

- it will crash

- offset 2311

Correct answer: C

Explanation:

auto.offset.reset=none means the consumer fails with an exception, instead of resetting its position, when it has no valid committed offset to resume from. Here the committed offset 10 has already been deleted from the log (10 < 45). The consumer therefore crashes rather than falling back to offset 45 or to the end of the log.
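The startup decision can be modeled as follows. `OffsetResetModel` is a hypothetical helper, not the consumer's internal code. `logEnd` is the log end offset, which is the offset of the next record to be written (2312 when the last stored record is 2311). The real consumer reports the failure case as its own exception type. This toy throws IllegalStateException instead.

```java
import java.util.OptionalLong;

final class OffsetResetModel {
    enum Reset { EARLIEST, LATEST, NONE }

    // Returns the offset the consumer starts reading from, or throws when
    // there is no usable committed offset and the policy is NONE.
    static long startPosition(OptionalLong committed, long logStart, long logEnd, Reset reset) {
        if (committed.isPresent()) {
            long offset = committed.getAsLong();
            // A committed offset still inside the log is always honored.
            if (offset >= logStart && offset <= logEnd) {
                return offset;
            }
        }
        // No committed offset, or it points at data that has been deleted.
        switch (reset) {
            case EARLIEST:
                return logStart;
            case LATEST:
                return logEnd;
            default:
                throw new IllegalStateException("no valid offset to resume from and auto.offset.reset=none");
        }
    }
}
```

With the question's numbers, the committed offset 10 is below the log start 45. EARLIEST would resume at 45, LATEST would resume at the log end, and NONE throws.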

Question 4

Which exceptions may be caught by the following producer code? (select two)

```java
ProducerRecord<String, String> record =
        new ProducerRecord<>("topic1", "key1", "value1");
try {
    producer.send(record);
} catch (Exception e) {
    e.printStackTrace();
}
```

- BrokerNotAvailableException

- SerializationException

- InvalidPartitionsException

- BufferExhaustedException

Correct answer: BD

Explanation:

These are client-side exceptions that can be raised inside send() itself. That happens before the message is sent to the broker and before send() returns a Future. Errors that arise after the record leaves the client, such as broker-side failures, are delivered later through the returned Future or callback, so this try/catch does not see them.
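To make the two failure paths concrete, here is a toy model in plain Java with no Kafka dependency. `ToyProducer` is hypothetical and deliberately simplified. It throws IllegalArgumentException and IllegalStateException as stand-ins for the SerializationException and BufferExhaustedException named in the answer. It reports broker-side failures only through the returned CompletableFuture.

```java
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CompletableFuture;

// Toy producer showing where failures surface; NOT the KafkaProducer implementation.
final class ToyProducer {
    private final int bufferCapacityBytes;
    private int bufferedBytes = 0;
    private long nextOffset = 0;

    ToyProducer(int bufferCapacityBytes) {
        this.bufferCapacityBytes = bufferCapacityBytes;
    }

    CompletableFuture<Long> send(Object value, boolean brokerRejects) {
        // 1. Serialization runs in the caller's thread, so a failure is thrown directly
        //    (stand-in for SerializationException; this toy serializer accepts only Strings).
        if (!(value instanceof String)) {
            throw new IllegalArgumentException("toy serializer accepts only String values");
        }
        byte[] bytes = ((String) value).getBytes(StandardCharsets.UTF_8);
        // 2. The record must fit in the client-side buffer, otherwise the failure is thrown
        //    directly (stand-in for BufferExhaustedException).
        if (bufferedBytes + bytes.length > bufferCapacityBytes) {
            throw new IllegalStateException("buffer exhausted");
        }
        bufferedBytes += bytes.length;
        // 3. Anything the broker decides arrives later through the future,
        //    so a try/catch around send() alone never sees it.
        CompletableFuture<Long> result = new CompletableFuture<>();
        if (brokerRejects) {
            result.completeExceptionally(new RuntimeException("broker not available"));
        } else {
            result.complete(nextOffset++);
        }
        return result;
    }
}
```

To observe broker-side errors, the caller has to inspect the future, for example by calling get() or join(), or by registering a callback.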

Question 5

When using the Confluent Kafka Distribution, where does the schema registry reside?

- As a separate JVM component

- As an in-memory plugin on your ZooKeeper cluster

- As an in-memory plugin on your Kafka Brokers

- As an in-memory plugin on your Kafka Connect Workers

Correct answer: A

Explanation:

Schema Registry is a separate application, running in its own JVM, that provides a RESTful interface for storing and retrieving Avro schemas. Newer versions also support JSON Schema and Protobuf.
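Because Schema Registry is a separate service, clients talk to it over HTTP. The sketch below only builds requests with the JDK's java.net.http API (Java 11+) and sends nothing. The endpoint paths (/subjects and /subjects/{subject}/versions), the application/vnd.schemaregistry.v1+json content type, the default port 8081, and the "<topic>-value" subject name follow Confluent's documented REST API and default subject naming. The `SchemaRegistryRequests` class, the host, and the topic name are illustrative assumptions.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper: builds (but does not send) requests to Schema Registry's REST API.
final class SchemaRegistryRequests {
    static final String CONTENT_TYPE = "application/vnd.schemaregistry.v1+json";

    // Minimal JSON string escaping: handles backslashes and quotes only, not control characters.
    static String escape(String raw) {
        return raw.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // POST /subjects/{topic}-value/versions registers a value schema under the
    // default topic-based subject name.
    static HttpRequest registerValueSchema(String baseUrl, String topic, String schemaJson) {
        String body = "{\"schema\":\"" + escape(schemaJson) + "\"}";
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/subjects/" + topic + "-value/versions"))
                .header("Content-Type", CONTENT_TYPE)
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    // GET /subjects lists every registered subject.
    static HttpRequest listSubjects(String baseUrl) {
        return HttpRequest.newBuilder().uri(URI.create(baseUrl + "/subjects")).GET().build();
    }
}
```

Against a running registry, a request could be sent with HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()). A successful registration returns the assigned schema ID in the response body.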

Question 6

What Java library is KSQL based on?

- Kafka Streams

- REST Proxy

- Schema Registry

- Kafka Connect

Correct answer: A

Explanation:

KSQL (since renamed ksqlDB) is built on Kafka Streams. It lets you express transformations in SQL, and these are automatically converted into a Kafka Streams program that runs in the backend.

Question 7

How can you find all the partitions where one or more replicas are not in sync with the leader?

- kafka-topics.sh --bootstrap-server localhost:9092 --describe --unavailable-partitions

- kafka-topics.sh --zookeeper localhost:2181 --describe --unavailable-partitions

- kafka-topics.sh --broker-list localhost:9092 --describe --under-replicated-partitions

- kafka-topics.sh --zookeeper localhost:2181 --describe --under-replicated-partitions

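Correct answer: D

Explanation:

--under-replicated-partitions lists only the partitions whose in-sync replica set (ISR) is smaller than their assigned replica list, which is exactly the condition in the question. --unavailable-partitions instead lists partitions whose leader is not available, and kafka-topics.sh has no --broker-list option. The --zookeeper option was removed from kafka-topics.sh in Kafka 3.0. On current versions, run the same check with --bootstrap-server localhost:9092 --describe --under-replicated-partitions.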
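The condition that --under-replicated-partitions filters on can be modeled in a few lines. `PartitionState` and `UnderReplicatedFilter` are hypothetical classes for illustration. The CLI itself reads replica and ISR information from the cluster's metadata.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Toy snapshot of one partition's assigned replicas and in-sync replica set (ISR).
final class PartitionState {
    final String name;
    final List<Integer> replicas;
    final Set<Integer> isr;

    PartitionState(String name, List<Integer> replicas, Set<Integer> isr) {
        this.name = name;
        this.replicas = replicas;
        this.isr = isr;
    }

    // Under-replicated: at least one assigned replica has fallen out of the ISR.
    boolean underReplicated() {
        return isr.size() < replicas.size();
    }
}

final class UnderReplicatedFilter {
    // Returns the names of the partitions whose ISR is smaller than their replica list.
    static List<String> underReplicated(List<PartitionState> partitions) {
        List<String> result = new ArrayList<>();
        for (PartitionState p : partitions) {
            if (p.underReplicated()) {
                result.add(p.name);
            }
        }
        return result;
    }
}
```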

Question 8

What can I use as a generic unique ID for messages I receive from a consumer?

- topic + partition + timestamp

- topic + partition + offset

- topic + timestamp

Correct answer: B

Explanation:

The combination (topic, partition, offset) uniquely identifies a message in Kafka. An offset is unique only within its partition, and a partition number only within its topic, so all three are needed.
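Here is a minimal sketch that turns the triple into a string key, for example to skip redelivered messages. `MessageIds` and `SeenMessages` are hypothetical helpers. With the real consumer, the three values come from ConsumerRecord.topic(), partition() and offset().

```java
import java.util.HashSet;
import java.util.Set;

final class MessageIds {
    // ':' is not a legal character in Kafka topic names (only letters, digits, '.', '_' and '-'),
    // so the three parts of the id cannot run into each other ambiguously.
    static String messageId(String topic, int partition, long offset) {
        return topic + ":" + partition + ":" + offset;
    }
}

// Hypothetical in-memory de-duplication keyed by (topic, partition, offset).
final class SeenMessages {
    private final Set<String> seen = new HashSet<>();

    // Returns true the first time a message is recorded and false for a redelivery.
    boolean markFirstSeen(String topic, int partition, long offset) {
        return seen.add(MessageIds.messageId(topic, partition, offset));
    }
}
```

An in-memory set like this grows without bound. A real application would persist the IDs or keep only a bounded window.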

Question 9

Your Streams application reads from an input topic that has 5 partitions. You run 5 instances of the application, each with num.stream.threads set to 5. How many stream tasks will be created, and how many will be active?

- 5 created, 1 active

- 5 created, 5 active

- 25 created, 25 active

- 25 created, 5 active

Correct answer: D

Explanation:

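Kafka Streams creates one task per input partition. Running 5 instances with 5 threads each gives 5 × 5 = 25 stream threads. Each partition's task is processed by exactly one thread, so only 5 threads are active and the remaining 20 sit idle. The expected answer counts these 25 threads as "created" tasks, although strictly speaking Kafka Streams creates only 5 tasks.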
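The arithmetic behind this answer fits in a small Java sketch. It assumes a single input topic (one sub-topology) and no standby replicas. `StreamsTaskMath` is a hypothetical helper, not part of the Kafka Streams API.

```java
// Arithmetic sketch for a Streams app reading one input topic, with no standby replicas.
final class StreamsTaskMath {
    // One task per input partition.
    static int tasks(int inputPartitions) {
        return inputPartitions;
    }

    // Total stream threads across all instances (num.stream.threads per instance).
    static int totalThreads(int instances, int threadsPerInstance) {
        return instances * threadsPerInstance;
    }

    // Each task runs on exactly one thread, so at most `tasks` threads have work.
    static int busyThreads(int inputPartitions, int instances, int threadsPerInstance) {
        return Math.min(tasks(inputPartitions), totalThreads(instances, threadsPerInstance));
    }
}
```

With the question's numbers, this gives 5 tasks, 25 threads, and 5 busy threads, leaving 20 threads idle.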

Question 10

The kafka-console-consumer CLI, when used with the default options

- uses a random group id

- always uses the same group id

- does not use a group id

Correct answer: A

Explanation:

If no group is specified, kafka-console-consumer generates a random consumer group ID.

Question 11

A Kafka producer application wants to send log messages that have no key to a topic. Which properties are mandatory in the producer configuration? (select three)

- bootstrap.servers

- partition

- key.serializer

- value.serializer

- key

- value

Correct answer: ACD

Explanation:

bootstrap.servers, key.serializer and value.serializer have no default values and must always be set. Both serializers are mandatory even when the records carry no key.
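Here is a sketch of the minimal configuration built as plain java.util.Properties, so no Kafka dependency is needed to compile it. The property names and the org.apache.kafka.common.serialization.StringSerializer class name are the real kafka-clients names. `MinimalProducerConfig` and its `missing` check are hypothetical helpers. The real producer constructor rejects a configuration that lacks a required setting by throwing a ConfigException.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

final class MinimalProducerConfig {
    static final List<String> REQUIRED = List.of("bootstrap.servers", "key.serializer", "value.serializer");

    static Properties forKeylessStringLogs(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // key.serializer is still required even though the log records carry no key.
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    // Hypothetical pre-flight check: lists required settings absent from props.
    static List<String> missing(Properties props) {
        List<String> result = new ArrayList<>();
        for (String key : REQUIRED) {
            if (!props.containsKey(key)) {
                result.add(key);
            }
        }
        return result;
    }
}
```

With kafka-clients on the classpath, these properties could be passed to new KafkaProducer<String, String>(props). Keyless records could then be built with new ProducerRecord<>("topic1", "some log line"), which leaves the key null. The ProducerConfig constants, such as ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, can replace the string literals.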

HOW TO OPEN VCE FILES

Use VCE Exam Simulator to open VCE files

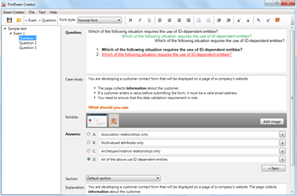

HOW TO OPEN VCEX AND EXAM FILES

Use ProfExam Simulator to open VCEX and EXAM files

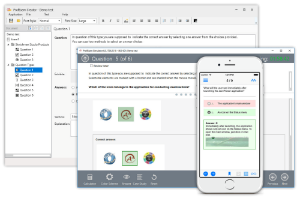

ProfExam at a 20% markdown

You have the opportunity to purchase ProfExam at a 20% reduced price

Get Now!